About 93% of online experiences begin with a search engine, according to Junto. Digital marketing services in the USA therefore use SEO tactics to increase a business’s online presence and reach potential customers. These tactics include writing content, optimizing website elements, choosing the right marketing channels, and much more. One more element can also support effective SEO: the robots file.

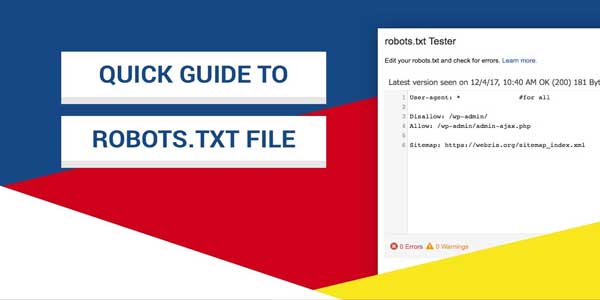

Robots File:

The robots file, or robots.txt, is a simple text file placed in the website’s root directory. It is part of the robots exclusion protocol (REP), which standardizes communication between a site and search engine crawlers. The file tells web robots (also known as search engine crawlers, spiders, or bots) which web pages they may crawl. Crawlers then analyze the content they are allowed to access, and search engines rank it against target keywords.

The Functions Of Robots.Txt File:

The file contains two basic instructions:

- Allow user-agents (web crawlers) to crawl a file on the website

- Disallow user-agents (web crawlers) from crawling a file on the website

Webmasters set these permissions using the following syntax:

User-agent: [user-agent name]
Disallow: [URL string]
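To make the syntax concrete, here is a minimal sketch that checks a rule set with Python's standard-library `urllib.robotparser`. The `/private/` path, the `example.com` URLs, and the `is_allowed` helper are placeholders invented for illustration, not rules from a real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the path is a placeholder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A page outside the disallowed path, and one inside it.
blog_allowed = is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post")
private_allowed = is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/private/report")
print(blog_allowed, private_allowed)
```

Because the rule is written for `User-agent: *`, it applies to any crawler name passed in, including the `Googlebot` string used here.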

How Robots.Txt File Works For SEO?

Webmasters, often through web and mobile application development services, provide search engine crawlers with the robots.txt file. Crawlers read this file, which helps SEO as follows:

When crawlers are allowed to access a page or media file, they crawl and analyze that content. They follow each link and examine the quality of the content. This helps search engines index the content so that it can be shown to internet users who are looking for that information.

If a website has no robots.txt file, crawlers will proceed to read all the information on the site. If they spend time on content that gives your site no direct SEO value, your online ranking may suffer.
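As a rough illustration of this default, the sketch below compares an empty rule set (standing in for a site with no robots.txt) against a small set of rules, using Python's standard-library `urllib.robotparser`. The URLs, the `/search` and `/cart` paths, and the `crawlable` helper are assumptions made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Placeholder URLs for illustration only.
URLS = [
    "https://example.com/",
    "https://example.com/search?q=shoes",
    "https://example.com/cart",
]


def crawlable(robots_lines, user_agent, urls):
    """Return the subset of urls that the rules allow user_agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# No rules at all: every URL is open to the crawler.
without_rules = crawlable([], "Googlebot", URLS)

# Hypothetical rules that keep crawlers away from low-value pages.
rules = ["User-agent: *", "Disallow: /search", "Disallow: /cart"]
with_rules = crawlable(rules, "Googlebot", URLS)

print(len(without_rules), len(with_rules))
```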

Uses Of Robots.Txt File:

This file can be used in many ways, including:

- Hiding duplicate content from search engines

- Specifying sitemap locations for the website

- Preventing search engines from crawling unwanted images and PDFs

- Preventing the website’s internal search results from showing on public search engine result pages (SERPs)

- Setting a crawl delay so that web servers are not overloaded when crawlers load several pieces of content at once
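Several of these uses can appear together in one file. The sketch below is a hypothetical robots.txt (the paths, the 10-second delay, and the sitemap URL are all invented) read back with Python's standard-library `urllib.robotparser`; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt combining several directives; all values are placeholders.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /search
Disallow: /downloads/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Delay, in seconds, requested between requests for this crawler.
delay = parser.crawl_delay("Googlebot")

# Sitemap URLs declared in the file.
sitemaps = parser.site_maps()

# Internal search results and the downloads folder are blocked.
search_ok = parser.can_fetch("Googlebot", "https://example.com/search?q=seo")
pdf_ok = parser.can_fetch("Googlebot", "https://example.com/downloads/brochure.pdf")

print(delay, sitemaps, search_ok, pdf_ok)
```

Note that `Crawl-delay` is not honored by every crawler (Googlebot, for one, ignores it), so it is best treated as a request and not a guarantee.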

How Is Robots.Txt File Used For Effective On-Site SEO?

Allow Access To Important Content:

Make sure your high-quality content is visible to crawlers, since this is the content that helps improve your rank.

Don’t Hide Private Data:

Don’t use this file to hide users’ private information. Other pages may link directly to the page that contains the sensitive details, so that page can still end up indexed in the SERP. Protect sensitive data with password protection instead.

Update And Submit File Immediately:

Search engines cache the contents of the robots.txt file and typically refresh that cache about once a day. If webmasters update the file, they can submit its URL to Google so that crawlers analyze web content according to the updated version of the robots file.

Don’t Build Links On Blocked Pages:

Search engines do not analyze links on pages that are blocked to their crawlers, so no link equity is passed to the link’s destination page. If you want to pass equity, don’t block crawlers from pages that contain vital links.

Don’t Allow Access To Duplicate Data:

Don’t let web crawlers crawl and analyze duplicate content on different web pages. For example, some websites provide print versions of blog posts for their users. When a crawler analyzes the same content more than once, it gets a poor impression of the website and cannot tell which version should rank in the SERP.
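As a small sketch of the print-version case, the code below generates a rule set that blocks a hypothetical `/print/` folder and then verifies it with Python's standard-library `urllib.robotparser`. The `build_robots_txt` helper, the folder name, and the URLs are assumptions for illustration; a real site would use whatever path its print pages actually live under.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_prefixes, user_agent="*"):
    """Build robots.txt text that blocks each path prefix for user_agent."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {prefix}" for prefix in disallowed_prefixes]
    return "\n".join(lines) + "\n"


# Hypothetical layout: print-friendly copies live under /print/.
robots_txt = build_robots_txt(["/print/"])

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# The original post stays crawlable; its print copy does not.
original_ok = parser.can_fetch("Googlebot", "https://example.com/blog/robots-guide")
print_ok = parser.can_fetch("Googlebot", "https://example.com/print/blog/robots-guide")

print(robots_txt)
print(original_ok, print_ok)
```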

Conclusion:

By using the robots.txt file correctly, webmasters control how search engine crawlers analyze their website content. This helps SEO considerably and helps websites rank prominently in search engine results.

Information Process Solutions is a USA-based digital marketing company that effectively provides digital marketing with the best SEO strategies for businesses.

About the Author:

Hi Sir,

This is a very helpful and informative article. Actually, I was googling for that kind of post. It’s very helpful. Thanks for sharing it.

Welcome here and thanks for reading our article and sharing your view.

Can you explain to me the default robots.txt file for wordpress?

Welcome here and thanks for reading our article and sharing your view.

Nice blog and extremely wonderful. You can do impressive much better but I still say this perfect. Keep trying for the superlative.

Welcome here and thanks for reading our article and sharing your view.

Nice article, It was great to learn about Robots.txt file to become seo hero.

Welcome here and thanks for reading our article and sharing your view.